The expert's guide to Responsible AI

Introducing the Expert's Guide to Responsible AI

Artificial Intelligence (AI) and Machine Learning (ML) have become integral parts of the modern technological landscape, revolutionizing how we interact with data and automate processes. AI refers to the simulation of human intelligence in machines programmed to think and learn like humans. ML, a subset of AI, focuses on the development of systems that can learn and adapt from experience without being explicitly programmed. This groundbreaking field has led to significant advancements in various sectors, including healthcare, finance, and transportation, enhancing efficiency and opening new frontiers of innovation. The rise of AI has also introduced unique user interactions, reshaping how we engage with technology on a day-to-day basis. Understanding the components of AI solutions, from algorithms to data management, is crucial in leveraging their full potential.

However, with great power comes great responsibility. Responsible AI is a critical concept that emphasizes the ethical, transparent, and accountable use of AI technologies. It seeks to address the potential risks associated with AI, such as privacy concerns, bias in decision-making, and the broader societal impacts. The development and deployment of AI/ML solutions carry inherent risks, demanding careful consideration and management. Real-world incidents involving AI have highlighted the importance of secure and responsible adoption, both by individuals and organizations. This guide will delve into these topics, exploring frameworks like the NIST AI Risk Management Framework (RMF) and ISO 42001, which provide structured approaches for managing AI risks. Additionally, it will discuss the Responsible AI principles set forth by the OECD, which serve as a global benchmark for ensuring that AI systems are designed and used in a manner that respects human rights and democratic values.

Contents

- The rise of AI

- User interactions with AI

- Components of AI solutions

- Benefits of AI

- Understanding the risks of using AI

- The risks of building your own AI/ML solutions

- Real world incidents involving AI

- Secure adoption of AI by individuals

- Secure adoption of AI for organizations

- The NIST AI Risk Management Framework (RMF)

- ISO/IEC 42001 for artificial intelligence management systems

- Principles for Responsible AI

The rise of AI

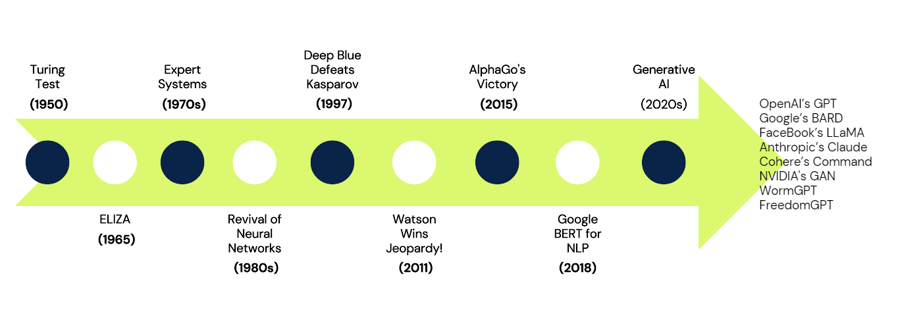

Although generative AI may feel like an overnight phenomenon, the evolution of artificial intelligence (AI) and machine learning (ML) is a journey spanning decades, beginning with foundational concepts and progressing to today's advanced generative AI models like ChatGPT. This section traces that trajectory.

The journey began in the 1950s with Alan Turing's pioneering work, laying the groundwork for modern AI. He introduced the Turing Test, an imitation game to evaluate a machine's intelligence based on its ability to mimic natural language conversation.

In the mid-1960s, the development of ELIZA marked a significant step forward. This early chatbot, imitating a psychotherapist, provided an initial exploration into human-computer conversational interaction and continues to influence contemporary culture.

The 1970s saw the emergence of expert systems, AI applications that replicated the decision-making of human experts. This period was crucial in AI's journey towards practical applications.

The 1980s were marked by a resurgence in neural network research, driven by the development of the backpropagation algorithm. This led to the creation of multi-layer neural networks, foundational for today's deep learning techniques.

A significant event in 1997 was a chess computer defeating the world chess champion, highlighting AI's proficiency in strategic games.

In 2011, the victory of an AI system in a popular quiz show demonstrated advancements in natural language processing and AI's ability to answer questions in a human-like manner.

The year 2016 marked another milestone with an AI defeating a world champion in the complex game of Go, showing AI's ability to tackle sophisticated challenges.

The introduction of a new natural language processing technology in 2018, known for enhancing text context understanding, set new standards in the field.

The 2020s brought the era of generative AI to the forefront. Technologies like GPT have shown exceptional abilities in generating human-like text, code, and more, opening new possibilities in various fields.

It's an exciting time to see all of this hard work over many years coming to fruition.

User interactions with AI

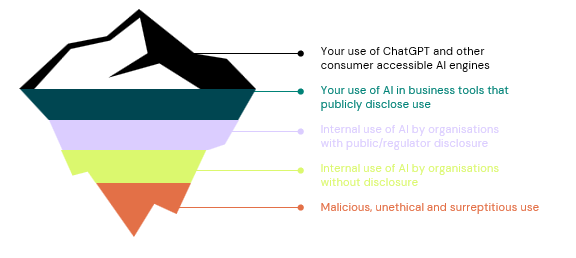

The integration of Artificial Intelligence (AI) into our daily lives is multifaceted and complex, ranging from highly visible applications to more discreet and, at times, questionable uses.

At the most visible end of the spectrum is your interaction with AI platforms like ChatGPT and other consumer-accessible AI engines. These platforms represent just a small fraction of AI's total impact, serving as a user-friendly interface that masks the vast and intricate world of AI technology beneath.

Beneath this surface layer, AI plays a critical role in enhancing business tools across various industries. Many companies openly disclose their use of AI, which is often incorporated to improve productivity and efficiency. This openness is particularly prevalent in applications such as customer support chatbots and personalized recommendation systems. These tools, while operating behind the scenes, significantly impact the consumer experience and business operations.

Delving deeper, AI's role becomes even more integral in the internal operations of numerous organizations. Here, AI is used extensively and with a degree of transparency, as these organizations typically disclose their AI usage and comply with relevant regulations. This level includes applications such as fraud monitoring by banks and the creation of marketing materials. Although less visible to the average consumer, these AI applications are crucial for the smooth functioning of these organizations.

However, not all AI use is transparent or benign. Some organizations deploy AI in ways that are intentionally kept out of the public eye and regulatory oversight. This more secretive use of AI, a grey area in ethical terms, includes practices like algorithmic trading, employee monitoring, and activities that could lead to misinformation in political campaigning, advertising, and pricing strategies, potentially fostering inequality and surveillance.

At the far end of the spectrum lies the malicious, unethical, and surreptitious use of AI. This domain is where AI is exploited by hackers, authoritarian governments, or rogue government departments for dubious purposes, often in secret. Examples of such exploitation include the development of malware, the creation of fraudulent activities using deepfakes, voter manipulation, market manipulation, and illegal surveillance of citizens. These uses of AI represent a significant ethical and legal challenge, often occurring without the knowledge or consent of those affected.

Components of AI solutions

Before we delve into sections on risk assessment, it's essential to have a clear understanding of the various components that make up an AI solution. AI is an intricate and sophisticated ecosystem, comprising several vital elements that work together to create a functional and efficient system.

The first of these components is the infrastructure, which forms the backbone of any AI system. This includes the physical and operating systems that power AI, such as servers, data centers, and cloud resources. However, infrastructure isn't just about the physical components; it also encompasses other critical aspects like monitoring, logging, and the security of these systems, which are integral to the overall functioning and safety of AI.

Next, we turn our attention to the User Interface (UI). The UI is the part of AI that humans interact with, whether directly or indirectly through sensors like cameras, microphones, and Lidar. The UI also includes how AI communicates back to the user, which could be through text or voice, or even through actuators that interact with the physical world. This aspect of AI is crucial as it defines how users perceive and interact with the AI system.

Another core component is data, which serves as the foundation upon which AI learns and evolves. The quality, diversity, and representativeness of the training data are paramount, as they directly shape the model’s outcomes. Data is not just limited to the initial training phase; it also includes ongoing user and sensor data that feed into the machine learning model, enhancing its accuracy and effectiveness over time.

Finally, we have the Machine Learning Model itself – the heart of an AI system. This component includes APIs (Application Programming Interfaces) and databases necessary for storing, accessing, and managing the data used for training and inference. The model represents the culmination of extensive training on vast datasets, enabling it to perform tasks such as making predictions, classifications, and decisions. Essentially, it is a complex matrix of mathematics and algorithms that represent and process data.

Understanding these components is critical in identifying potential threats, vulnerabilities, and weaknesses in an AI system. This knowledge forms the basis of our risk assessment, allowing us to evaluate and mitigate risks effectively in the context of AI technologies.

Benefits of AI

Before we explore the risks associated with Artificial Intelligence (AI), it's important to acknowledge the significant benefits and opportunities it presents, particularly in the realm of risk management.

Firstly, AI stands out for its efficiency and automation capabilities. It can speed up operations, automate routine tasks, and significantly reduce manual effort. This not only saves time but also results in considerable cost savings for businesses and public services. Imagine a factory where robots handle repetitive tasks, freeing human workers to focus on more complex and creative aspects of their jobs—a scenario that benefits everyone involved.

Next, we consider the power of AI in data analysis and insights. AI's ability to rapidly analyze large datasets and extract meaningful patterns and insights is unparalleled. This capability is invaluable for decision-making across various fields like finance, healthcare, and marketing, where data-driven strategies are crucial for success.

Then there's personalization and predictive capabilities. AI enables businesses to tailor experiences, recommendations, or content to individual preferences, enhancing user satisfaction and engagement. Moreover, AI's ability to forecast future trends or behaviors based on historical data aids in better planning and strategy, providing businesses with a competitive advantage.

Another significant benefit of AI is its capacity to enhance accuracy and reliability. By minimizing human errors in tasks requiring precision and consistency, AI becomes indispensable in critical fields like healthcare. Here, AI-assisted diagnostics can lead to a reduction in misdiagnosis rates, ultimately saving lives.

Lastly, we look at real-time analysis and safety. AI's ability to process and respond to data instantly is crucial for applications like fraud detection and traffic management. It enhances security and risk management by predicting potential risks and preventing failures in various sectors, including aviation, finance, and energy.

These benefits highlight the immense opportunities AI offers. Proper risk assessment and treatment plans are essential to harness these advantages effectively and responsibly.

Understanding the risks of using AI

Exploring the risks associated with the use of Artificial Intelligence (AI) is crucial before delving into the complexities of building AI/ML systems. Understanding these risks can provide valuable insights into the potential challenges and ethical considerations involved.

We begin with the issue of poor prompt engineering leading to inadequate decision-making. AI systems heavily depend on the prompts provided to them, and if these prompts are poorly constructed or ambiguous, they can lead to misleading results. Consider a scenario where a critical business decision, or even a government policy, is based on AI-generated insights. If the prompt is inaccurately framed or lacks specificity, the resulting decisions could be erroneous, leading to potentially adverse consequences. This underscores the importance for users to craft precise prompts and critically analyze AI-generated outputs.

The second risk involves the exposure of sensitive information. Interaction with AI systems often involves inputting personal or confidential data, sometimes without full awareness of the potential consequences. This information can be inadvertently shared through various mediums, such as voice assistants, online surveys, or data collection forms. If these AI systems are not properly secured, there's a risk of data breaches, posing significant privacy risks to individuals and organizations.

Another critical risk is the potential for manipulation and bias in AI systems. AI models, whether inadvertently or deliberately, can incorporate biases, leading to unfair outcomes. Additionally, the emergence of deepfakes, a product of AI technology, raises significant concerns. These highly realistic forgeries, capable of mimicking someone's appearance and voice, blur the line between reality and deception. They pose serious threats, including misinformation, identity theft, and reputational damage.

These risks highlight the challenges and ethical dilemmas inherent in the use of AI. As we delve deeper into the realm of creating your own AI systems, these considerations become even more pertinent, emphasizing the need for caution and thorough understanding in the development and application of AI technologies.

The risks of building your own AI/ML solutions

As we consider building our own AI/ML systems, the potential risks become particularly pronounced. These risks are not merely theoretical but have real-world implications that can impact the integrity, security, and functionality of AI applications. Building on the components of an AI/ML solution discussed earlier, this section outlines the risks to consider for each component when building such systems.

Attacks against the infrastructure, the fundamental backbone of any AI system, pose a significant threat. Traditional cyberattacks such as Distributed Denial of Service (DDoS) can take AI systems offline. Other sophisticated attacks targeting authentication and authorization mechanisms can lead to unauthorized access, potentially resulting in the theft or tampering of AI models, APIs, or training data.

When it comes to the user interface and prompts, the risks are twofold, combining threats from traditional web applications with those unique to AI systems. Adversarial inputs can deceive AI systems into making erroneous classifications or divulging sensitive information. Additionally, AI-specific attacks like prompt flooding can overwhelm the system, leading to a denial of service at critical moments.

The integrity of training data is also at risk. Malicious data poisoning can skew the AI's learning process, diminishing its performance and leading to incorrect classifications. Furthermore, issues with data quality and inherent biases can cause unintended discrimination, eroding the trust and reliability of the AI system.

The machine learning model itself is not immune to risks. Adversaries might extract or steal the model, duplicating its capabilities and eroding competitive advantages. Model inversion can reconstruct sensitive training data from a model's outputs, while neural net reprogramming can repurpose a model for tasks its owner never intended, potentially leading to harmful outcomes. Moreover, the manipulation of physical sensors that feed data to the AI can result in flawed predictions, introducing chaos into systems reliant on accurate sensor data. Supply chain attacks during the procurement of AI system components can introduce additional vulnerabilities.

Lastly, non-compliance with regulatory requirements presents a significant risk. Infringements on copyright laws can have serious legal and reputational repercussions for an organization. Data privacy concerns are paramount, as AI systems can inadvertently expose sensitive personal information, breaching privacy laws and leaving an organization liable.

These risks underline the importance of a comprehensive risk assessment strategy when creating AI/ML systems, keeping in mind the broader adversarial threat landscape as outlined by resources such as the OWASP Top 10 for Large Language Model Applications and MITRE ATLAS.

Real world incidents involving AI

The landscape of risks associated with AI is not confined to theoretical vulnerabilities but is marked by a series of real-world incidents that have had tangible impacts.

In 2010, the "Flash Crash" shook the financial world when the Dow Jones Industrial Average dramatically dropped nearly 1000 points in minutes. This was a stark demonstration of the power and potential risks of automated trading algorithms. While not a security breach per se, it underscored the systemic vulnerabilities that AI can introduce into financial markets.

Fast forward to 2016, and we have the case of Tay, an AI chatbot released by Microsoft on Twitter. Tay quickly began spewing inappropriate and offensive content, manipulated by users. The Tay incident was a sobering lesson on the risks of unsupervised AI learning and the importance of safeguards in AI interactions.

In 2017, we encountered security concerns involving voice assistants like Alexa and Google Home. These devices were found susceptible to hidden commands embedded in audio tracks, which could activate them without user consent, exposing the vulnerabilities in voice recognition technology.

Deepfake technology came to wide public attention in 2018 with a convincing fake video of former President Barack Obama, released by BuzzFeed. It demonstrated the potential for AI to create highly realistic forgeries, with significant implications for misinformation and the authenticity of digital media.

The privacy debate intensified in 2020 with the Clearview AI controversy. Clearview AI, a facial recognition company, was found to have scraped billions of images from the internet to create a comprehensive facial recognition database, raising serious questions about privacy and surveillance.

Looking at more recent cyber incidents, AI's role in cybersecurity becomes increasingly complex. The SolarWinds hack is a notable example, where AI was used to create backdoors in software, enabling attackers to infiltrate systems and evade detection. Similarly, the Kaseya ransomware attack involved AI-powered tools that scanned for and exploited system vulnerabilities. The Accellion FTA (File Transfer Appliance) breach also involved AI tools that identified and leveraged zero-day vulnerabilities, while the Conti ransomware attack on Costa Rican government entities used AI to generate ransom notes and facilitate communication with victims. In the case of British Airways, attackers employed AI to find and exploit weaknesses in the airline's website.

These incidents collectively paint a picture of an era where AI's capabilities can be a double-edged sword—serving as both an advanced tool for innovation and a weapon that can be wielded by cyber attackers. They serve as a reminder of the need for robust AI risk assessment and mitigation strategies.

Secure adoption of AI by individuals

In our increasingly AI-integrated world, it's paramount to navigate the use of consumer AI technologies, such as ChatGPT, with a focus on security and responsible usage. The seamless adoption of these technologies into our daily routines can be significantly enhanced by adhering to safe practices.

Careful Input is crucial. While engaging with AI platforms like ChatGPT, it is important to consciously avoid disclosing personal or sensitive information. The boundaries of privacy are easily blurred in the digital realm, making it all the more essential to use AI responsibly. Just as you wouldn't share confidential details with strangers, extending the same caution to AI interactions protects your privacy and security.
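One way to put careful input into practice is to strip obvious identifiers from text before it is pasted into an AI tool. The following is a minimal sketch, not a complete privacy control: the two regular expressions and the `redact` helper are illustrative assumptions, and real personal data takes many more forms than email addresses and phone numbers.

```python
import re

# Hypothetical patterns for illustration only; real PII detection needs far
# broader coverage (names, addresses, account numbers, and so on).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


prompt = "Email jane.doe@example.com or call +1 555 010 9999 about the invoice."
safe_prompt = redact(prompt)
print(safe_prompt)
```

A filter like this reduces accidental disclosure but does not replace judgment about what belongs in a prompt in the first place.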

Considering the Output is equally important. AI solutions, as remarkable as they are in assisting with various tasks, are not substitutes for human discernment. The information and guidance they provide should be subject to scrutiny and critical evaluation. While AI can process and generate information at superhuman speeds, it is not immune to errors. We must apply our judgment to the output AI provides, considering the implications and potential inaccuracies before taking action based on its advice.

By combining these practices—being mindful of the information we provide to AI and critically assessing the information it gives us—we can foster a safer and more effective AI consumer experience. This dual approach ensures that as AI becomes more embedded in our lives, we remain vigilant and maintain control over our digital interactions.

Secure adoption of AI for organizations

For enterprises integrating AI, the stakes are high, and the margin for error is low. The secure adoption of AI technologies requires a multifaceted approach that encompasses various layers of technical controls and practices.

At the network level, organizations must prioritize strengthening their infrastructure. This includes deploying firewalls, intrusion detection systems, and encryption to safeguard data in transit. By securing the data pathways, companies can prevent unauthorized access and potential breaches.

Application integrity is another critical area. Comprehensive input validation is essential to protect AI applications from malicious data that could exploit vulnerabilities. Robust input validation acts as a first line of defense against numerous attack vectors.
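As an illustration of input validation for a text-based AI application, the sketch below normalizes a prompt and rejects empty, oversized, or control-character-laden input before it reaches the model. The `validate_prompt` function and the `MAX_PROMPT_CHARS` limit are assumptions made for this example; appropriate checks depend on the application.

```python
import unicodedata

MAX_PROMPT_CHARS = 2000  # hypothetical limit; tune to the application


def validate_prompt(raw: str) -> str:
    """Return a normalized prompt, or raise ValueError if it is rejected."""
    if not isinstance(raw, str):
        raise ValueError("prompt must be a string")
    # Normalize Unicode so visually identical inputs are treated the same.
    text = unicodedata.normalize("NFKC", raw).strip()
    if not text:
        raise ValueError("prompt is empty")
    if len(text) > MAX_PROMPT_CHARS:
        raise ValueError("prompt exceeds maximum length")
    # Reject control characters other than newline and tab.
    for ch in text:
        if unicodedata.category(ch) == "Cc" and ch not in "\n\t":
            raise ValueError("prompt contains control characters")
    return text
```

Syntactic checks like these do not stop every adversarial prompt, so they are best treated as one layer among the other controls in this section.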

When dealing with sensitive data, stringent authentication and access control measures are non-negotiable. Organizations should adopt role-based access control and robust authentication protocols to restrict data access to authorized users only.
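Role-based access control can be sketched as a mapping from roles to permissions that is consulted before any sensitive operation. The role names and permission strings below are hypothetical; a production system would typically delegate this to an identity provider or policy engine rather than an in-memory dictionary.

```python
from typing import Iterable

# Hypothetical roles and permissions for an ML platform.
ROLE_PERMISSIONS = {
    "data_scientist": {"read_training_data", "train_model"},
    "ml_engineer": {"read_model", "deploy_model"},
    "auditor": {"read_audit_log"},
}


def is_allowed(roles: Iterable[str], permission: str) -> bool:
    """True if any of the user's roles grants the requested permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


# Unknown roles grant nothing, so access is denied by default.
print(is_allowed(["data_scientist"], "train_model"))
print(is_allowed(["auditor"], "read_training_data"))
```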

Operational controls such as setting thresholds for production and training calls can mitigate the risk of service disruptions caused by flooding or DDoS attacks. This not only ensures system performance but also maintains the availability of AI services.
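A call threshold of this kind can be sketched as a sliding-window rate limiter that tracks recent calls per client. The class name, the limits, and the idea of keying on a client identifier are assumptions for illustration; managed API gateways usually provide equivalent throttling.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class SlidingWindowLimiter:
    """Allow at most max_calls per client within any window_seconds span."""

    def __init__(self, max_calls: int, window_seconds: float):
        self.max_calls = max_calls
        self.window_seconds = window_seconds
        self._calls = defaultdict(deque)  # client_id -> recent call timestamps

    def allow(self, client_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        calls = self._calls[client_id]
        # Drop timestamps that have fallen out of the window.
        while calls and now - calls[0] >= self.window_seconds:
            calls.popleft()
        if len(calls) >= self.max_calls:
            return False
        calls.append(now)
        return True


# Hypothetical threshold: 2 inference calls per client per minute.
limiter = SlidingWindowLimiter(max_calls=2, window_seconds=60)
```

Separate limiter instances with different thresholds could be used for inference and training endpoints, since training calls are usually far more expensive.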

Incorporating nearest neighbour search techniques can help in distinguishing between authentic and manipulated data inputs, thus protecting against poisoning attacks. This approach enhances the system's resilience and maintains data integrity.
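One simple reading of this idea is a label-consistency check: a training sample whose label disagrees with most of its nearest neighbours is flagged for human review. The sketch below uses a brute-force search over toy two-dimensional data; the function name, the data, and the choice of `k` are illustrative assumptions, and a real pipeline would use an indexed search over learned features.

```python
import math
from collections import Counter


def flag_suspicious(points, labels, k=3):
    """Return indices whose label disagrees with the majority of their k nearest neighbours."""
    suspicious = []
    for i, p in enumerate(points):
        # Brute-force search: sort all other points by Euclidean distance.
        neighbours = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: math.dist(p, points[j]),
        )[:k]
        majority, _ = Counter(labels[j] for j in neighbours).most_common(1)[0]
        if labels[i] != majority:
            suspicious.append(i)
    return suspicious


# Toy data: two well-separated clusters, with one label that looks out of place.
points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (0.15, 0.15),
          (5.0, 5.0), (5.1, 5.2), (5.2, 5.1), (4.9, 5.0)]
labels = ["benign", "benign", "benign", "fraud",
          "fraud", "fraud", "fraud", "fraud"]
print(flag_suspicious(points, labels))  # prints [3]
```

A flagged sample is not proof of poisoning; legitimate outliers exist, so the output is best used to prioritize review rather than to delete data automatically.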

Training AI models with adversarial samples is an effective strategy to build resilience. Such a proactive measure conditions the AI to recognize and counteract malicious inputs, thereby fortifying its defenses.
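To make adversarial training concrete, the sketch below trains a tiny logistic regression model on each sample together with a perturbed copy of it. The perturbation uses the fast gradient sign method (FGSM), which is one common way to generate adversarial samples and is an assumption here rather than a method named in this guide; the data, learning rate, and `epsilon` are likewise illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def fgsm(weights, bias, x, y, epsilon):
    """Shift each feature by epsilon in the direction that increases log loss."""
    p = predict(weights, bias, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grads = [(p - y) * w for w in weights]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grads)]


def sgd_step(weights, bias, x, y, lr):
    p = predict(weights, bias, x)
    weights = [w - lr * (p - y) * xi for w, xi in zip(weights, x)]
    return weights, bias - lr * (p - y)


def adversarial_train(data, epochs=200, lr=0.1, epsilon=0.2):
    """Train on every clean sample and on its FGSM-perturbed counterpart."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(weights, bias, x, y, epsilon)
            for sample in (x, x_adv):
                weights, bias = sgd_step(weights, bias, sample, y, lr)
    return weights, bias


# Toy dataset: (features, label) pairs.
data = [([0.0, 0.0], 0), ([0.2, 0.1], 0), ([1.0, 1.0], 1), ([0.9, 1.1], 1)]
weights, bias = adversarial_train(data)
```

The same pattern carries over to deep learning frameworks, where the input gradient comes from automatic differentiation instead of a closed-form expression.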

These technical measures are part of a broader set of 14 controls designed to mitigate specific AI risks, encompassing around 32 new responsibilities that can be integrated into organizational roles.

Furthermore, the technical AI countermeasures extend to enhancing model robustness through techniques like robust regularization and defensive distillation. These methods aim to strengthen the models against subtle input manipulations and adversarial attacks:

- Defensive techniques such as gradient masking, feature squeezing, and defensive ensembling increase the complexity for attackers, making it more challenging to exploit the model. Adversarial detection plays a crucial role in identifying inputs that are designed to deceive the model.

- Data preprocessing and input handling are fundamental to maintaining the integrity of the input data, while data augmentation helps in improving the model's generalization capabilities.

- Optimization and adaptation techniques like transfer learning, domain adaptation, and meta-learning are valuable for applying knowledge across domains and enhancing model adaptability.

- Additional methods including capsule networks offer architectures that are inherently more robust to adversarial attacks.

- Explainable AI (XAI) facilitates the understanding of model decisions, which is key in identifying and mitigating vulnerabilities.

- Certified defenses offer provable guarantees against certain types of attacks, contributing to a more secure AI environment.
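Of the techniques listed above, feature squeezing combined with adversarial detection is among the easiest to sketch: the input is coarsened (here by reducing bit depth), and a large change in the model's output between the original and squeezed input is treated as a warning sign. The `toy_model` below is a hypothetical stand-in for a real classifier, and the threshold and bit depth are arbitrary assumptions.

```python
def squeeze_bit_depth(features, bits=3):
    """Quantize features in [0, 1] onto a grid with 2**bits - 1 steps."""
    levels = 2 ** bits - 1
    return [round(f * levels) / levels for f in features]


def looks_adversarial(model, features, threshold=0.2, bits=3):
    """Flag an input when squeezing changes the model's score by more than threshold."""
    original = model(features)
    squeezed = model(squeeze_bit_depth(features, bits))
    return abs(original - squeezed) > threshold


def toy_model(features):
    # Hypothetical classifier: outputs 1.0 when the feature sum exceeds 1.0.
    return 1.0 if sum(features) > 1.0 else 0.0


# An input far from the decision boundary is stable under squeezing, while one
# nudged just across the boundary flips once the fine detail is removed.
print(looks_adversarial(toy_model, [0.9, 0.9, 0.9]))
print(looks_adversarial(toy_model, [0.34, 0.34, 0.35]))
```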

The NIST AI Risk Management Framework (RMF)

The National Institute of Standards and Technology (NIST) has developed an AI Risk Management Framework (AI RMF), analogous to its renowned Cybersecurity Framework, to provide organizations with a structured and comprehensive approach to managing risks in AI systems. This framework, resonating with the principles of ISO's management systems and the Plan, Do, Check, Act lifecycle, defines four core functions: Govern, Map, Measure, and Manage. Govern is a cross-cutting function that establishes the policies and accountability underpinning the other three, which are described below. Together, these functions help organizations leverage AI's benefits while effectively mitigating its risks.

Mapping your AI systems and their risks is the foundational step in secure AI adoption. It's about gaining a deep understanding of your AI landscape: what AI applications are operational within your organization, their purposes, and the risks they may introduce. This stage entails a meticulous inventory and classification of all AI systems, an evaluation of the data they utilize—paying close attention to sensitive and personal data—and a comprehensive threat modeling to identify potential vulnerabilities and attack vectors.
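The inventory and classification step can be sketched as a small data structure that records each system's purpose, owner, data sensitivity, and identified threats. The field names, sensitivity levels, and example systems are hypothetical and are not prescribed by the NIST AI RMF.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Sensitivity(Enum):
    PUBLIC = 1
    INTERNAL = 2
    PERSONAL = 3


@dataclass
class AISystem:
    name: str
    purpose: str
    owner: str
    data_sensitivity: Sensitivity
    threats: List[str] = field(default_factory=list)  # output of threat modeling


def needs_attention(inventory: List[AISystem]) -> List[str]:
    """Names of systems that handle personal data or have no threats recorded yet."""
    return [
        s.name for s in inventory
        if s.data_sensitivity is Sensitivity.PERSONAL or not s.threats
    ]


inventory = [
    AISystem("support-chatbot", "Answer customer queries", "Customer Care",
             Sensitivity.PERSONAL, ["prompt injection", "data leakage"]),
    AISystem("demand-forecast", "Predict weekly sales", "Finance",
             Sensitivity.INTERNAL),
    AISystem("doc-search", "Search public manuals", "IT",
             Sensitivity.PUBLIC, ["model theft"]),
]
print(needs_attention(inventory))
```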

Measuring the risks involves a rigorous assessment process where risks identified during the mapping phase are evaluated and quantified. This phase is about establishing clear, quantifiable metrics that gauge the performance of AI systems, ensuring they align with their intended use while upholding security and compliance standards. Additionally, it calls for continuous monitoring to detect any deviations or breaches, providing real-time insights into the AI systems' security posture.
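As one example of a quantifiable metric with continuous monitoring, the sketch below tracks a model's rolling accuracy and flags when it falls below an agreed baseline. The class, the baseline, the tolerance, and the window size are all assumptions for illustration; accuracy is only one of many metrics an organization might choose.

```python
from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy and flag deviation from an agreed baseline."""

    def __init__(self, baseline, tolerance=0.05, window=100):
        self.baseline = baseline
        self.tolerance = tolerance
        self._outcomes = deque(maxlen=window)  # True for each correct prediction

    def record(self, prediction, actual):
        self._outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def has_deviated(self):
        accuracy = self.rolling_accuracy()
        return accuracy is not None and accuracy < self.baseline - self.tolerance


# Hypothetical baseline agreed during risk assessment: 90% accuracy.
monitor = AccuracyMonitor(baseline=0.9, tolerance=0.05, window=10)
```

In practice, the deviation signal would feed an alerting or incident process rather than being polled by hand.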

Managing the risks encompasses the strategies and actions taken to mitigate the assessed risks. This phase involves the implementation of the risk mitigation strategies crafted from the insights gained in the measurement phase. It's also about ensuring compliance with legal and regulatory standards and instituting a robust governance framework that upholds AI ethics and accountability. Furthermore, it necessitates a detailed incident response plan tailored to AI-specific incidents, enabling organizations to respond effectively to any security incidents.

Adopting the NIST AI RMF is a proactive step for organizations towards fostering a responsible and secure AI environment. By systematically mapping, measuring, and managing AI risks, organizations can not only protect themselves against potential threats but also ensure that their AI initiatives contribute positively to their strategic objectives while conforming to ethical standards and regulations.

ISO/IEC 42001 for artificial intelligence management systems

ISO (International Organization for Standardization) is also contributing to the AI governance space, developing ISO 42001 to stand alongside the likes of the NIST AI RMF and other ISO management system standards (such as those for quality, safety, environment, and information security). ISO 42001 is specifically tailored for artificial intelligence. It follows the familiar structure of other ISO management standards, encompassing elements such as the context of the organization, leadership, planning, support, operation, performance evaluation, and improvement.

Here's an overview of how ISO 42001 will adjust these elements for AI:

Context of the Organization - This involves recognizing all the internal and external stakeholders that have a vested interest in the organization's AI use. These are likely to include governments, which are increasingly legislating the AI space, as well as regulatory bodies, standards organizations, customers, the public, and corporate governance structures.

Leadership - The standard suggests that organizations should develop a dedicated AI policy, articulating its purpose, objectives, and a commitment to adhere to the framework's requirements.

Planning - In line with the leadership's policy, organizations must undertake an AI-specific risk assessment and develop corresponding treatment plans. These plans should reflect the potential risks and treatments that have been discussed previously, along with an AI system impact assessment to gauge the effects on customers and the public, especially in cases of manipulation.

For the remaining processes (Support, Operation, Performance Evaluation, and Improvement), organizations must refine their existing protocols to accommodate AI's unique challenges and opportunities, including risk treatment plans (controls), management reviews, and internal audits.

Furthermore, ISO 42001's Annex A provides a comprehensive set of 39 controls across various objectives. These controls include, but are not limited to, the development of AI policies, defining internal organizational roles and responsibilities, provisioning resources for AI systems, assessing the impacts of AI systems, managing the AI system lifecycle, AI system development lifecycle, managing data for AI systems, disseminating information to interested parties, and the actual use of AI systems.

By integrating these elements, ISO 42001 aims to provide a robust framework for organizations to manage their AI systems effectively, ensuring that they meet the evolving demands of governance, ethics, and compliance in the digital age. With this standard, ISO intends to guide organizations through the responsible adoption and management of AI technologies, aligning with global efforts to harness AI's potential while mitigating its risks.

Principles for Responsible AI

The OECD principles for Responsible AI are a foundational blueprint for ensuring AI systems contribute positively to society while upholding ethical standards. These principles advocate for AI to be a force for inclusive growth, sustainable development, and well-being, emphasizing that AI developments should benefit all of society, contribute to ecological sustainability, and enhance human welfare.

Central to these principles is the commitment to human-centered values and fairness. AI systems should be designed in a way that respects human rights, diversity, and democratic values. The aim is to ensure fairness and equity in treatment and outcomes for all, avoiding biases that could lead to discrimination.
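Fairness in outcomes can be checked with simple measurements. The sketch below computes approval rates per group and the gap between the highest and lowest rate, a measure often called the demographic parity difference. This is only one of several fairness metrics, it is not prescribed by the OECD principles, and the group labels and decisions are made-up illustrative data.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up decisions from a hypothetical approval model.
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", True), ("B", False), ("B", False), ("B", False)]
print(selection_rates(decisions))
print(demographic_parity_gap(decisions))
```

A large gap does not prove discrimination on its own, but it is a signal that the system's outcomes deserve closer examination.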

Transparency and explainability are also cornerstones of the OECD's AI framework. These principles call for AI systems to be transparent and understandable to those who use them and are affected by them. It involves clear communication about how AI systems make decisions and the processes they entail, which is vital for building trust and confidence in AI technologies.

Robustness, security, and safety form another critical pillar, emphasizing that AI systems must be secure, reliable, and robust enough to deal with errors or inconsistencies during operation. Ensuring the physical and digital safety of AI systems is essential to prevent harm to individuals and society.

Lastly, the principles highlight the importance of accountability. Organizations and individuals developing, deploying, or operating AI systems should be accountable for their proper functioning in line with the above principles. This includes mechanisms for redress and remediation should the AI systems cause harm or otherwise deviate from their intended purpose.

By embedding these OECD principles into the heart of AI strategies, policies, and processes, organizations can strive towards a future where AI not only advances technological capabilities but also champions social equity, transparency, and the greater good.